Project Rafiki

Designed an AR experience to understand how human gestures evolve as a result of interacting with technology.

Team:

Vanshika Sanghi, Rashi Balachandran, Arsh Ashwani, Abhijeet Balaji, Janaki Syam, Chaitali, Ananya Rane, Sohayainder Kaur

Duration:

10 days

Overview

About the project

The technology we use daily has a direct impact on our natural behaviour. Its rapid evolution has pushed people to abandon traditional bodily practices while constantly adapting to present-day screen-based technology. Digital interfaces and screen-based technology guide our bodily gestures and interactions so deeply that it has become unclear what drives our intuition.

The project questions the concepts of embodied interaction, the overlap of physical and digital spaces, the implications of future technology for human behaviour, and the role of humans in making decisions in a digital world.

Research

Understanding methods / tools

OpenCV and ArUco Markers

OpenCV is a library of functions for real-time computer vision. ArUco is a library that provides binary square fiducial markers, which can be used for pose estimation. By employing these markers in our project, we could use OpenCV to detect their presence and trigger the corresponding algorithm whenever a marker was detected.
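To make the idea of a binary square fiducial marker more concrete, here is a minimal sketch of how a detected bit grid might be matched to a marker ID. It is only an illustration: the 3x3 patterns in `TOY_DICTIONARY` and the `identify` helper are hypothetical and are not the real ArUco dictionary or the OpenCV API, which also handle borders, perspective correction and error tolerance.

```python
def rotate(grid):
    """Rotate a square bit grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


# Hypothetical toy dictionary: marker ID -> inner bit pattern.
# Real ArUco dictionaries are larger and chosen to be hard to confuse.
TOY_DICTIONARY = {
    0: [[1, 0, 0],
        [0, 0, 0],
        [0, 0, 0]],
    1: [[1, 1, 0],
        [0, 0, 0],
        [0, 0, 0]],
}


def identify(grid, dictionary=TOY_DICTIONARY):
    """Return (marker_id, quarter_turns) for an observed grid, or None.

    quarter_turns is the number of clockwise quarter turns applied to the
    observed grid before it matched the stored pattern, which hints at how
    the marker was oriented in front of the camera.
    """
    for marker_id, pattern in dictionary.items():
        candidate = grid
        for quarter_turns in range(4):
            if candidate == pattern:
                return marker_id, quarter_turns
            candidate = rotate(candidate)
    return None
```

Because the match is tried under all four rotations, a marker can be held at any of those orientations and still be recognised, and the recovered rotation is one ingredient of pose estimation.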

Define

Creating Context

Technology has such a strong impact on people that many interactions now stem from the technology itself rather than from the human.

With further advancements in technology, we are losing our natural bodily practices while adapting to screen-based technologies. We discussed and tried to identify how technology has changed these interactions and the concept of intuition and what it means to us.

Taking inspiration from Paul Dourish’s work, we defined embodied interaction for ourselves and made it the basis of our project: to study embodied interactions and how to preserve these gestures.

What are embodied interactions?

Embodied interaction involves the manipulation, creation and communication of meaning through the engagement of the human body with artefacts enabled by/for computation. It is a cross between social computing and tangible computing.

Insights

Research Questions

Ideation

Brainstorming on Concepts

We used brainstorming methods such as SCAMPER and Crazy 8s to come up with as many possible solutions as we could. We timed our sessions and discussed our ideas with each other. From all the ideas, we picked a few that had the potential to showcase embodied interactions. We grouped the choices according to the categories they fit in and continued the elimination process. We then voted on which idea would be the best to move forward with.

Concept

What is Project Rafiki?

The exploration took the form of an AR experience room where users would be able to paint in virtual spaces. Using MS Paint as the platform and tools enabled by ArUco markers, users would be able to paint with bodily gestures. They would have full spatial and bodily freedom to complete tasks that are familiar from MS Paint, which would help them emulate natural and intuitive gestures.

The experience room would help us observe human behaviour and gather feedback when both the digital interface of Paint and the traditional gestures of painting were controlled by bodily gestures.

Prototyping

Assembling the Experience room

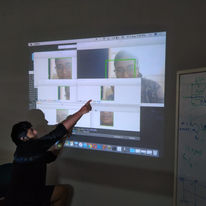

The prototype for the experience room was built with OpenCV in Python. Each tool required a specific algorithm, which was coded separately and then integrated. We used multiple ArUco markers and linked each marker ID to its corresponding tool.
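As a rough illustration of linking marker IDs to separately written tools, the sketch below keeps each tool as its own function and dispatches on the marker ID. Everything named here is an assumption made for the example: the IDs `0` and `1`, the grid-of-integers canvas, and the helpers `new_canvas` and `apply_marker` are hypothetical and not taken from the project's code.

```python
BACKGROUND = 0  # value of an empty canvas cell (assumed representation)
INK = 1         # value of a painted canvas cell


def new_canvas(width, height):
    """Create a blank canvas as a list of rows."""
    return [[BACKGROUND] * width for _ in range(height)]


def paintbrush(canvas, x, y):
    """Paint the cell under the marker."""
    canvas[y][x] = INK


def eraser(canvas, x, y):
    """Reset the cell under the marker to the background."""
    canvas[y][x] = BACKGROUND


# Hypothetical link between ArUco marker IDs and tools.
TOOL_BY_MARKER_ID = {0: paintbrush, 1: eraser}


def apply_marker(canvas, marker_id, x, y):
    """Run the tool linked to marker_id at (x, y).

    Returns True if a tool was applied, False for an unknown marker
    or a position outside the canvas.
    """
    tool = TOOL_BY_MARKER_ID.get(marker_id)
    if tool is None:
        return False
    if 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
        tool(canvas, x, y)
        return True
    return False
```

Keeping the mapping in one dictionary means a new tool can be added by writing one function and registering one more marker ID, without touching the existing tools.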

Predicted gestures for ArUco markers

For each function assigned to an ArUco marker, we predicted the gesture users would make and how the interaction would take place.

The gesture for the paintbrush tool involves a full range of movement.

The gesture associated with erasing on paper was incorporated into the functionality of the eraser tool.

1. The camera captures the ArUco marker shown by the user as an image, which is read using the OpenCV library.

2. The marker is detected by looking it up in the ArUco marker dictionary.

3. The functionality associated with the detected marker is displayed on the screen.
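The three steps above can be sketched roughly as follows. This is a sketch under stated assumptions, not the project's code: the `DICT_4X4_50` dictionary, the marker IDs in `TOOLS`, the camera index and the function names are all illustrative. It needs an OpenCV build that includes the `aruco` module, and it checks for both the newer `ArucoDetector` class and the older `detectMarkers` function.

```python
# Hypothetical marker IDs and tool names, chosen for the example.
TOOLS = {0: "paintbrush", 1: "eraser"}


def detect_marker_ids(frame):
    """Steps 1 and 2: find ArUco marker IDs in a BGR camera frame."""
    import cv2  # imported here so the helpers below work without OpenCV

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # Assumed dictionary; use whichever one the markers were printed from.
    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_4X4_50)
    if hasattr(cv2.aruco, "ArucoDetector"):  # OpenCV 4.7 and newer
        detector = cv2.aruco.ArucoDetector(dictionary)
        _corners, ids, _rejected = detector.detectMarkers(gray)
    else:  # older opencv-contrib builds
        _corners, ids, _rejected = cv2.aruco.detectMarkers(gray, dictionary)
    return [] if ids is None else [int(i) for i in ids.flatten()]


def label_for(marker_ids, tools=TOOLS):
    """Step 3: choose what to display for the detected markers."""
    for marker_id in marker_ids:
        if marker_id in tools:
            return tools[marker_id]
    return "no tool"


def run(camera_index=0):
    """Show the active tool on the live camera feed until 'q' is pressed."""
    import cv2

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            label = label_for(detect_marker_ids(frame))
            cv2.putText(frame, label, (10, 30),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 255, 0), 2)
            cv2.imshow("Rafiki sketch", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Calling `run()` starts the camera loop. Running detection on every frame is the simplest arrangement, but it also ties the responsiveness of the tools to how quickly each frame can be processed.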

A 3D model of the case study: the experience room

Testing

Key Findings

We assumed that the usage and functionality of the tools would come intuitively to users because of their familiarity with the platform. With the new modality, however, users found it harder to make the connection, which raised the question: has technology truly changed intuition?

Users needed an explanation from time to time to switch from one tool to another. People were slow in their movements because our code couldn't respond at the pace at which they were moving, but that made them stop and think. Even with abundant space available, users chose to remain static, which could stem from their past interactions with 2D screens. It was interesting to see the different ways in which users decided to use their bodies to collaborate and create art in the experience room.

Conclusion

Future Scope

The next step for Project Rafiki would be to conduct further testing along with feedback sessions to better understand why users respond to it the way they do. Another direction would be to explore different use cases, such as education, medicine and sports, to test the extent of the project and see whether the context affects the results.

This particular case study could be expanded to a three-dimensional canvas, where the user would be able to make use of the surrounding environment to its fullest extent. Adapting more tools would enhance the experience and might open the user to understanding different gestures.

This project was also selected as a Late-Breaking Work for the India HCI 2019 conference in Hyderabad.

Here are some fun posters for our exhibition.

Sohayainder Kaur, Vanshika Sanghi, Rashi Balachandran

Abhijit Balaji

Janaki Syam, Arsh Ashwini Kumar

Chaitali, Ananya