Project Rafiki

Designed an AR experience aimed at understanding how human gestures have evolved as a result of interacting with technology.

Project Details

Team:

Vanshika Sanghi, Rashi Balachandran, Arsh Ashwani, Abhijeet Balaji, Janaki Syam, Chaitali, Ananya Rane, Sohayainder Kaur

Duration:

10 days

Introduction

From childhood, we learn a lot through the tools we are given. A child who is given toys to play with will learn differently from a child who is given an iPad. Because technology has become more accessible and easier to own and use, much has changed about our inherent bodily gestures and about what comes intuitively to us. Digital interfaces and screen-based technology guide our bodily gestures and interactions so deeply that it has become unclear what drives our intuition.

The project tries to question the concepts of embodied interactions, the overlap of physical and digital spaces, the implications of future technology on human behavior and the role of humans in making decisions in a digital world.

Concept

Project Rafiki took Paul Dourish’s work§ as its starting point. From there, our core question became:

Can embodied interactions and their gestures be preserved?

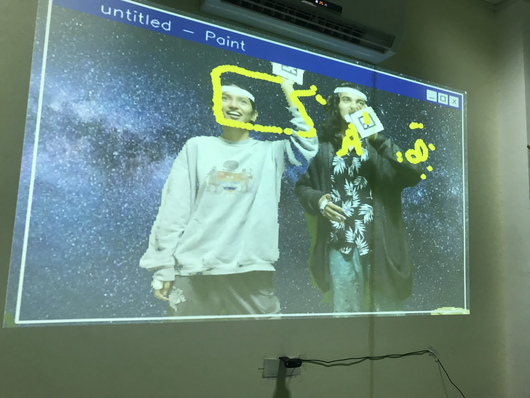

This exploration took the form of an experience room for painting in virtual spaces. The aim was to observe people's behavior, reactions, and feedback as they engaged with the AR experience.

Technology has such a strong influence on people that many interactions now stem from the technology itself rather than from the human.

A real-life MS Paint experience room, built with the OpenCV bindings for Python and ArUco markers, was used to study embodied interactions, how they differ from modern-day gestures, their impact on humans, and their possible future.

The experience room included various tools inspired by familiar MS Paint tools. They allowed the user full spatial and bodily movement and tried to emulate natural, intuitive gestures.
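Because each tool was tied to a marker that the camera could recognise, every tool needed its own printable marker. The sketch below shows one way to generate them. It assumes OpenCV 4.7 or later (where `cv2.aruco.generateImageMarker` is available) and the `DICT_4X4_50` dictionary. The tool list beyond the paintbrush and eraser, and every marker ID, are hypothetical and are not taken from the project.

```python
"""Generate one printable ArUco marker per tool (hypothetical tool IDs)."""
import os

# Hypothetical assignment of marker IDs to MS Paint-inspired tools;
# the IDs actually used in Project Rafiki are not documented.
TOOL_MARKERS = {
    "paintbrush": 0,
    "eraser": 1,
    "fill": 2,  # hypothetical extra tool
}


def check_ids(mapping, dictionary_size):
    """Ensure every tool has a distinct ID that exists in the dictionary."""
    ids = list(mapping.values())
    if len(set(ids)) != len(ids):
        raise ValueError("two tools share a marker ID")
    if any(not 0 <= i < dictionary_size for i in ids):
        raise ValueError("marker ID outside the dictionary range")
    return True


def generate_markers(out_dir="markers", side_pixels=400):
    """Write one PNG per tool into out_dir (assumes OpenCV >= 4.7)."""
    import cv2  # imported lazily so check_ids works without OpenCV installed

    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_4X4_50)
    check_ids(TOOL_MARKERS, 50)  # DICT_4X4_50 holds IDs 0..49
    os.makedirs(out_dir, exist_ok=True)
    for tool, marker_id in TOOL_MARKERS.items():
        img = cv2.aruco.generateImageMarker(dictionary, marker_id, side_pixels)
        cv2.imwrite(os.path.join(out_dir, f"{tool}.png"), img)
```

Calling `generate_markers()` writes `paintbrush.png`, `eraser.png` and `fill.png` to a `markers` folder, ready to print. The ID check runs first, so a typo in the table fails early instead of producing two tools that share the same marker.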

Gestures:

The gesture for the paintbrush tool involves a full range of movement.

The gesture associated with erasing on paper was incorporated into the functionality of the eraser tool.
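One way to turn these two gestures into drawing actions is to track the marker's position frame by frame. The sketch below is hypothetical and is not the project's code. It assumes that the centre of the detected marker stands in for the user's hand, that a frame with no detection ends the current brush stroke, and that erasing is recognised as a back-and-forth rubbing motion. The thresholds are illustrative values only.

```python
"""Turn per-frame marker positions into brush strokes and eraser rubs.

Hypothetical sketch: positions are marker centres in pixel coordinates,
and None means the marker was not detected in that frame.
"""


def marker_centre(corners):
    """Average the corner points of one detected ArUco marker."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return sum(xs) / len(xs), sum(ys) / len(ys)


class StrokeTracker:
    """Collect paintbrush points; a missed detection ends the current stroke."""

    def __init__(self):
        self.strokes = []
        self._current = None

    def update(self, point):
        if point is None:
            self._current = None  # marker lost: the line breaks here
            return
        if self._current is None:
            self._current = []
            self.strokes.append(self._current)
        self._current.append(point)

    def segments(self):
        """Yield (start, end) pairs ready for a line-drawing call."""
        for stroke in self.strokes:
            for start, end in zip(stroke, stroke[1:]):
                yield start, end


def is_rubbing(xs, min_reversals=3, min_travel=15):
    """Return True if horizontal motion reverses direction often enough,
    mimicking the back-and-forth motion of erasing on paper."""
    reversals = 0
    last_direction = 0
    for prev, cur in zip(xs, xs[1:]):
        delta = cur - prev
        if abs(delta) < min_travel:
            continue  # ignore small jitter
        direction = 1 if delta > 0 else -1
        if last_direction and direction != last_direction:
            reversals += 1
        last_direction = direction
    return reversals >= min_reversals
```

In this design, every missed detection splits a stroke. This is one plausible way that breaks in a line could appear whenever detection falls behind the user's movement.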

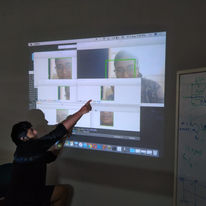

1. The ArUco marker shown by the user is captured as an image by the camera using the OpenCV library.

2. Using the ArUco marker dictionary, the shown marker is detected.

3. The functionality associated with the detected marker is displayed on the screen.
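The three steps above can be sketched as a single capture loop. This is a minimal sketch and not the project's original code. It assumes OpenCV 4.7 or later (for the `cv2.aruco.ArucoDetector` class), the `DICT_4X4_50` dictionary, and a hypothetical table that maps marker IDs to tool names.

```python
"""Detect-and-display loop: camera frame -> ArUco ID -> tool name."""

# Hypothetical marker-ID-to-tool table; the project's real IDs are not documented.
TOOLS = {0: "Paintbrush", 1: "Eraser", 2: "Fill"}


def active_tool(ids, tools=TOOLS):
    """Step 3: return the first detected marker that maps to a known tool."""
    if ids is None:  # OpenCV returns None when no markers are found
        return None
    for marker_id in ids:
        name = tools.get(int(marker_id))
        if name is not None:
            return name
    return None


def run(camera_index=0):
    """Steps 1 and 2 with OpenCV >= 4.7; press q to quit."""
    import cv2  # imported lazily so active_tool works without OpenCV

    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_4X4_50)
    detector = cv2.aruco.ArucoDetector(dictionary, cv2.aruco.DetectorParameters())
    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()  # Step 1: read the shown marker as an image
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            corners, ids, _rejected = detector.detectMarkers(gray)  # Step 2
            if ids is not None:
                cv2.aruco.drawDetectedMarkers(frame, corners, ids)
            tool = active_tool(None if ids is None else ids.flatten())
            if tool:  # Step 3: display the tool tied to the marker
                cv2.putText(frame, tool, (20, 40), cv2.FONT_HERSHEY_SIMPLEX,
                            1.0, (0, 255, 0), 2)
            cv2.imshow("Rafiki", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Keeping the ID-to-tool lookup in its own function separates it from the camera code, so the mapping can be changed or tested without a webcam. Calling `run()` opens the default camera.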

Ideation

Before we started working on this project, we knew we wanted to explore gesture recognition and embodied interactions using the OpenCV library in Python.

With those guidelines in mind, we narrowed down some questions and began ideating using methods like SCAMPER and Crazy 8s.

We picked the strongest ideas and arranged them on Google Jamboard.

We categorized those further, grouping ideas under the themes they fell into.

We further categorized them according to the team members' preferences and then took a vote.

After debating different approaches, we settled on an interactive space that allowed people to paint using gestures. Our aim was to simulate the feeling of being inside MS Paint.

Research Questions:

Embodied interaction involves the manipulation, creation and communication of meaning through the engagement of the human body with artefacts enabled by/for computation. It is a cross between social computing and tangible computing.

§ Where the Action Is: The Foundations of Embodied Interaction, by Paul Dourish

Observations and Findings

- It was assumed that the use and functionality of the tools would come intuitively to users because of their familiarity with the platform, but with the new modality, users found it harder to make the connection. This raised the question: has technology truly changed intuition?

- Users needed explanation and some time to switch from one tool to another and to draw without breaks in the line, though this could also be due to the limitations of the technology.

- The technology struggled to keep pace with users' movements, which slowed them down considerably but also made them think more about their actions.

- Most users were also observed to remain static, which could stem from their past interactions with digital interfaces, usually experienced on 2D screens.

- It was interesting to see users collaborate in different ways and use their bodies in various ways, including their heads and legs.

A 3D model of the case study: the experience room

It shows how this technology could be implemented in other settings, and it also raises questions of human control and personalization.

Conclusion and future scope

After running and testing the project over two days with multiple people, the team largely concluded that working in such spaces was not as intuitive for people as expected. Technology has probably led users to adapt their ways of doing things to the function they are trying to perform. This led to the realization that digital technology is now integrated so deeply into our body language that “intuitive gestures” are influenced by both physical and digital actions.

The next step for Project Rafiki would be to conduct further testing along with feedback sessions to better understand why users respond to it the way they do. Another direction would be to explore different use cases, such as education, medicine and sports, to test the reach of the project and see whether context affects the results.

This particular case study could be expanded to a three-dimensional canvas, where the user would be able to make full use of the surrounding environment. Adding more tools would enhance the experience and might open users up to understanding different gestures.

This project was also selected for the Late-Breaking Work track at the India HCI 2019 conference in Hyderabad.

Here are some fun posters for our exhibition.

Sohayainder Kaur, Vanshika Sanghi, Rashi Balachandran

Janaki Syam, Arsh Ashwini Kumar

Abhijit Balaji

Chaitali, Ananya